BEDA, Simon Hermann

The EU AI Act: What’s in Force – and What’s Still in Flux?

The EU’s Artificial Intelligence Act (AI Act) is entering a decisive phase. While parts of the law are already in force, the European Commission is currently discussing whether to delay some of the more complex rules. The reasons are largely practical: technical standards are not ready, and many companies—especially small ones—need more time to prepare.

Here’s a clear overview of what the AI Act is, what’s already in effect, and what might change.

What is the EU AI Act?

The AI Act is the world’s first comprehensive law on artificial intelligence. It entered into force in August 2024. Its goal is to ensure that AI systems used in the EU are safe and transparent and respect fundamental rights, without slowing down innovation.

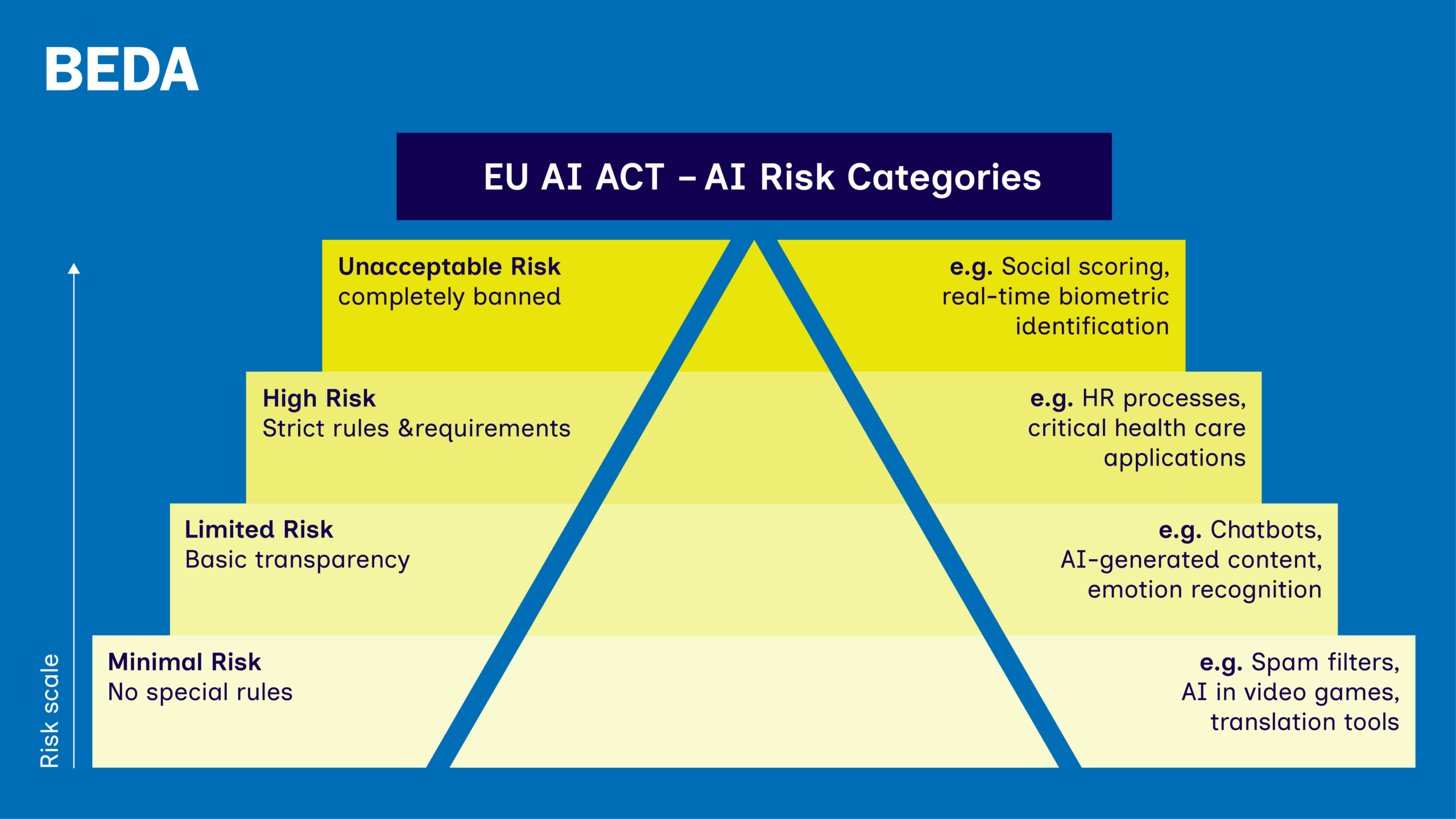

To do this, the Act applies a risk-based framework, classifying AI systems according to their potential impact on individuals, society, and fundamental rights.

Risk categories:

- Unacceptable risk: completely banned (e.g. social scoring)

- High risk: strict rules and requirements (e.g. AI in healthcare, policing, recruitment)

- Limited risk: basic transparency obligations (e.g. chatbots)

- Minimal risk: no special rules (e.g. games or spam filters)

Which rules are already in effect?

Since 2 February 2025, the first provisions of the AI Act have applied. These include:

- A ban on AI practices deemed to pose an unacceptable risk

- An AI literacy requirement: providers and deployers must ensure that staff working with AI systems have a sufficient understanding of them

Further obligations follow in stages. Requirements for general-purpose AI models, such as documentation and transparency, apply from 2 August 2025.

What are the current discussions about?

The next major phase of the Act—rules for high-risk AI systems—is scheduled for August 2026. But there are growing concerns that this timeline may not be realistic.

The main reasons are:

- Delayed technical standards: These were originally expected by mid-2025 but will likely not be finalised until 2026

- Compliance concerns: Especially for small and medium-sized enterprises (SMEs), which may face disproportionate burdens under the current schedule

Because of these issues, some Member States, led by Poland, have proposed postponing specific obligations and introducing more exemptions for smaller or low-complexity systems, and the European Commission is considering whether to delay some of the rules. No official decision has been made so far, but discussions are ongoing.

What should organisations do now?

Despite the possibility of delays, the overall direction of the AI Act remains unchanged. Organisations developing or deploying AI systems in the EU should:

- Continue preparing for compliance, especially if their systems fall into the high-risk category

- Stay updated on regulatory changes and the final publication of harmonised technical standards

- Review their AI governance frameworks and documentation practices early

Why is this important?

Regina Hanke of Deutscher Designtag, who is also BEDA Secretary, points out that the AI Act is a significant milestone, particularly for its potential to protect the copyright and intellectual property of designers and creators. However, further refinement is essential, especially to ensure a clear and consistent interpretation across all European countries.

‘A harmonised understanding is key to delivering coherent legal application throughout the EU. Looking ahead, such clarity will help maintain Europe’s competitive edge in a global landscape increasingly shaped by AI-driven innovation. This is not just about regulating AI in Europe—it’s about positioning Europe as a global leader in trustworthy AI.’

– Regina Hanke (BEDA Secretary, Deutscher Designtag)